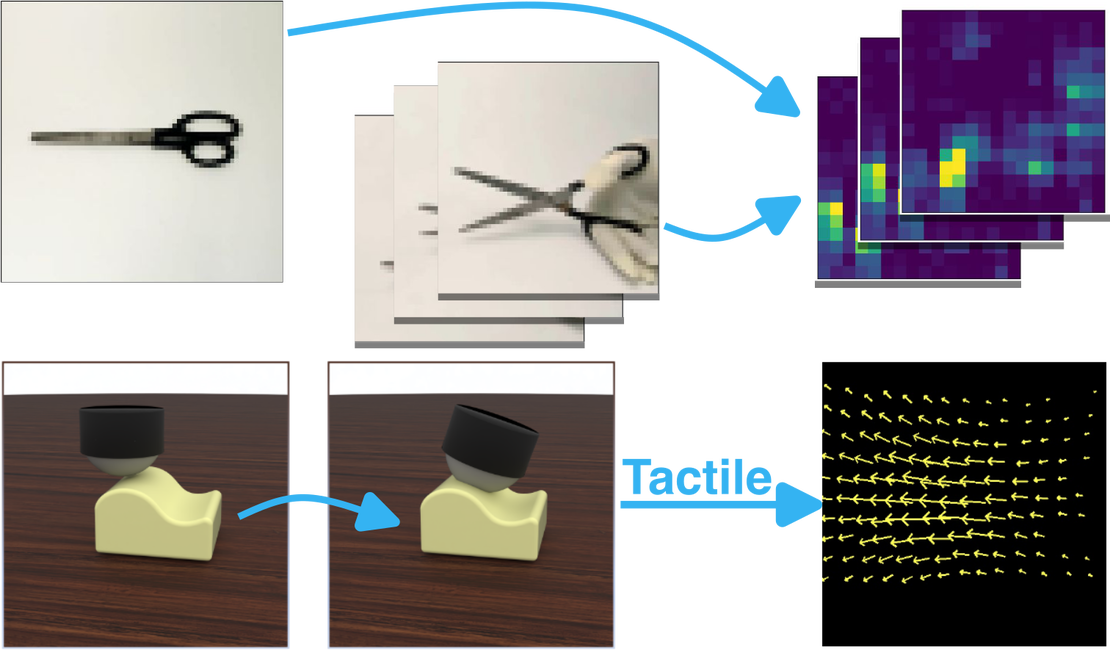

Tactile Sensing and Contact Modeling

Tactile perception and contact modeling are crucial for successful manipulation, as they enable robots to understand and interact with their environment through touch. By sensing tactile information, robots can perceive object properties, detect contacts, and adjust their grasp and movement accordingly. Integrating tactile sensing with visual observation enhances robot dexterity, stability, and overall performance in contact-rich manipulation scenarios.


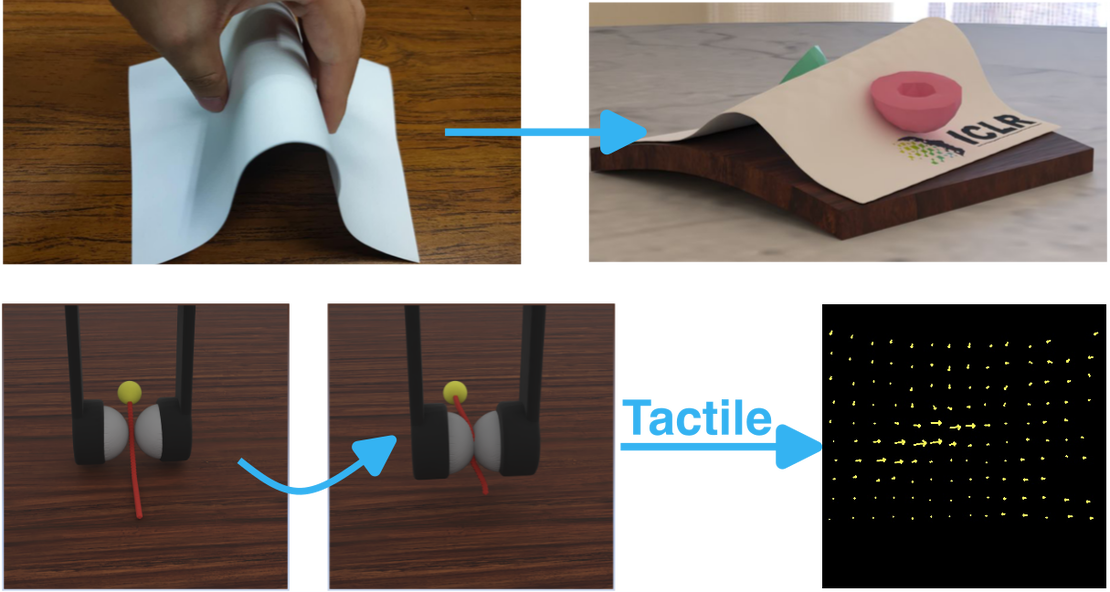

Soft Material Modeling and Manipulation

Learning control strategies for manipulating soft materials (e.g., cables, paper) is challenging, and the behavior of soft materials under contact is difficult to model. In collaboration with the MIT-IBM Watson AI Lab, we presented a fully differentiable simulation platform for thin-shell object manipulation tasks (e.g., paper) and a differentiable simulation environment for vision-based tactile sensors (e.g., GelSight) for learning robotic skills efficiently in simulation.


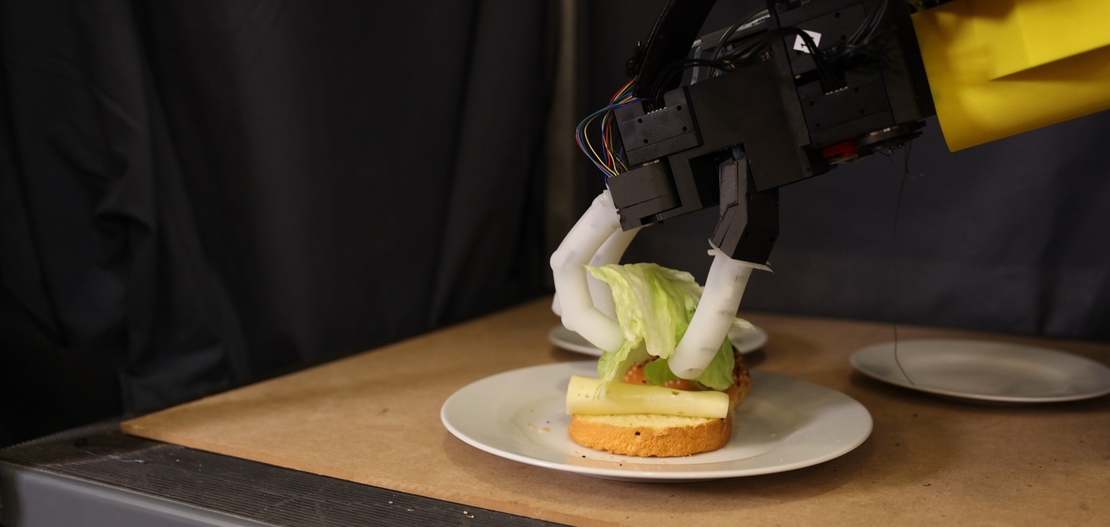

Robotic Manipulation

Our research aims to advance robotic manipulation capabilities through the development of innovative robotic hands and a comprehensive multimodal data collection platform. We have designed and fabricated two novel robotic hands: a directly 3D-printed soft robotic hand for delicate grasping, and a soft-rigid hybrid dexterous robotic hand that combines flexibility and precision. We have also established a multimodal data collection environment equipped with wearable sensors and cameras, allowing us to capture and integrate diverse sensory inputs and visual data of human behavior. This integrated setup enables us to explore complex manipulation tasks, develop sophisticated control algorithms, and push the boundaries of robotic dexterity and autonomy.
